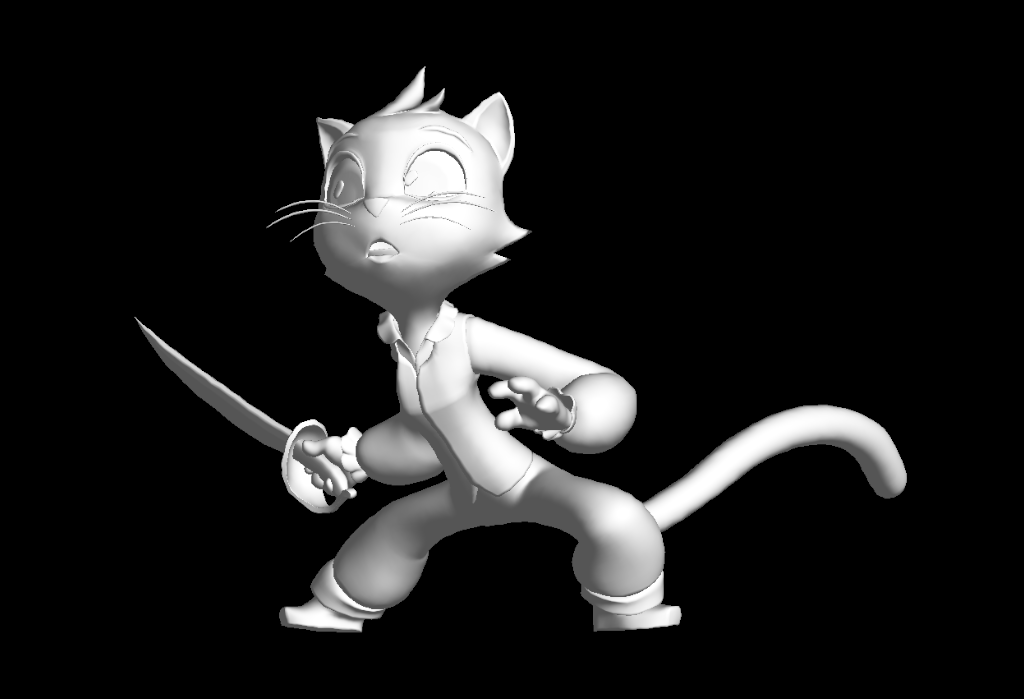

A fully-operational real-time stylized animation and rendering pipeline!

Here’s a test using a bunch of the tools and techniques I’ve been working on in Unreal lately! It’s probably pretty close to what the final short will look like:

This is all being rendered and composited completely in real time in UE, and what you’re seeing here is the direct output from UE with no modification. It actually reaches 35 fps when not in playback (during playback it’s capped to 24, of course)! Background art by Chris Barret.

As you may recall, the point of all this--ephemeral rigging, interpolationless animation, real-time NPR rendering--is to do animation productions that can have high production values and full character animation, but also be relatively fast and cheap to produce. A big part of that is being able to use illustrated backgrounds, which (depending on style) can be much cheaper to produce than building a lot of CG assets, and allow a much more direct way to produce the final image.

But to make this work, you really can’t render something in a conventional, physically-based, photorealistic CG style. There are really two reasons for that: one, conventional CG against painterly or graphic backdrops would stick out like a sore thumb, and feel completely wrong stylistically. And painting backdrops in a photorealistic style would wipe out the speed advantage (and, in fact, reverse it!). Two, the very perfection of photorealistic CG demands a higher level of animation polish. Most drawn animation, even highly polished drawn animation of the classic Disney variety, includes lots of wiggles, “wall hits,” “dead” frames, and other things we CG animators struggle mightily to force out of our animation with endless rounds of polish. It’s just not as much of an issue in drawn animation, because the visually graphic style is much more forgiving. A sufficiently stylized rendering approach gives you similar advantages.

I think that this is because the graphical look of drawn artwork contains less information. The beauty of drawing and painting is that the artist is presenting only necessary information, and the viewer's own mind fills in the rest. This gives the viewer’s eye permission to elide any imperfections. It’s the same way with variable pose rate vs full frame rate animation: if you’re using a mix of 1s and 2s and flow your poses together well, the lower information density lets you get away with murder in terms of polish. However, while lots of research has gone into NPR rendering over the years, very few of the NPR techniques I’m familiar with actually simplify an image very much.

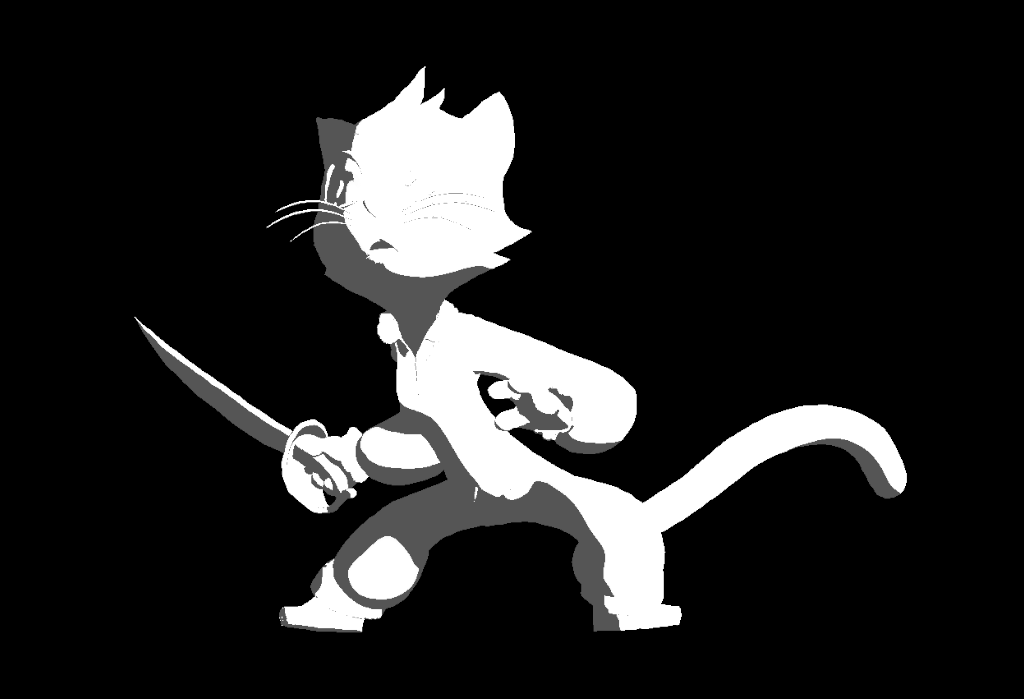

Consider the classic two-tone, created by rendering a conventional lighting pass and reducing it to two colors with a threshold:

The line of the terminator is picking up all kinds of detail from the mesh’s actual curvature, and it’s ugly as sin. Sure, this image has lower information density than a full render, but it’s still got way too much, and in the worst possible places.
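To make the problem concrete, here is a minimal Python sketch of the classic two-tone described above: compute an ordinary diffuse term per pixel, then threshold it. The scanline of normals, the light direction, the threshold, and the two tone values are all made-up illustration data, not anything taken from the actual UE setup.

```python
import math

def lambert(normal, light_dir):
    """Diffuse term N.L for one unit normal, clamped to [0, 1]."""
    length = math.sqrt(sum(c * c for c in light_dir))
    n_dot_l = sum(n * (l / length) for n, l in zip(normal, light_dir))
    return max(0.0, min(1.0, n_dot_l))

def two_tone(lighting, threshold=0.5, dark=0.25, lit=1.0):
    """Collapse a continuous lighting value into one of two flat tones."""
    return lit if lighting >= threshold else dark

# One scanline of normals across a rounded form (hypothetical test data).
angles = [-math.pi / 2 + i * math.pi / 8 for i in range(9)]
angles[6] -= 0.7  # a small wrinkle in the mesh, tilting one normal away from the light
normals = [(math.sin(a), 0.0, math.cos(a)) for a in angles]

light_dir = (1.0, 0.0, 0.3)  # arbitrary light from the right, slightly toward camera
tones = [two_tone(lambert(n, light_dir)) for n in normals]
print(tones)
```

In this toy data the single tilted normal is enough to punch an isolated dark patch into the lit side, which is the same kind of noisy terminator the threshold produces on a real mesh.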

A number of projects have successfully overcome this issue, but most of their methods aren’t useful to me. Arc System Works used an interesting approach for their Guilty Gear games, where they directly manipulated vertex normals to simplify the shading. This works well for their anime-inspired style, where large shapes are usually broken up with details like belts, collars, and exposed pectorals, but when I tried it out I found it very difficult to produce shading that was simple enough with larger shapes and a subdivided mesh. Another approach is that taken by the short One Small Step. Their approach looks amazing, but apparently required a lot of shot-by-shot tweaking of the mesh, which is something I specifically want to avoid. Then there are productions like Spider-Verse or this League of Legends short, which have great stylized looks but don’t really aim to simplify the shading. Miles Morales’s face may have drawn lines defining his forehead wrinkles, and a dot pattern that evokes silver-age comics printing techniques, but you can still see most of the usual shading detail of a conventional render underneath it.

For The New Pioneers and the Monkey test, we used a method that smoothed the normals on the surface by averaging them with their neighbors, essentially blurring them. This worked some of the time, but a lot of the time it didn’t really produce the simple two-tone we were looking for, and we had to do a bunch of per-shot compositing trickery with animated masks to fix issues with various shots. While there will probably always be a few shots that need individual tweaks, I don’t want that to be the norm. The idea here is rapid production.
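The normal-averaging idea can be sketched roughly as follows. This is an assumption-laden illustration, not the tool used on those projects: the `smooth_normals` helper, the adjacency-list format, and the five-vertex strip are all hypothetical.

```python
import math

def normalize(v, fallback):
    """Return v scaled to unit length, or fallback if v is (nearly) zero."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v) if length > 1e-12 else fallback

def smooth_normals(normals, neighbors, iterations=1):
    """Replace each normal with the normalized average of itself and its neighbors."""
    current = [normalize(n, n) for n in normals]
    for _ in range(iterations):
        smoothed = []
        for i, n in enumerate(current):
            group = [n] + [current[j] for j in neighbors[i]]
            summed = tuple(sum(axis) for axis in zip(*group))
            smoothed.append(normalize(summed, n))
        current = smoothed  # each pass reads only the previous pass's normals
    return current

# Hypothetical strip of five vertices; the middle normal is tilted by a wrinkle.
normals = [(0.0, 0.0, 1.0)] * 2 + [(0.6, 0.0, 0.8)] + [(0.0, 0.0, 1.0)] * 2
neighbors = [[1], [0, 2], [1, 3], [2, 4], [3]]  # indices of adjacent vertices

smoothed = smooth_normals(normals, neighbors, iterations=2)
```

Each pass spreads the wrinkle's tilt over its neighbors instead of removing it outright, which is one plausible reason the result is softer but not reliably a clean two-tone.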

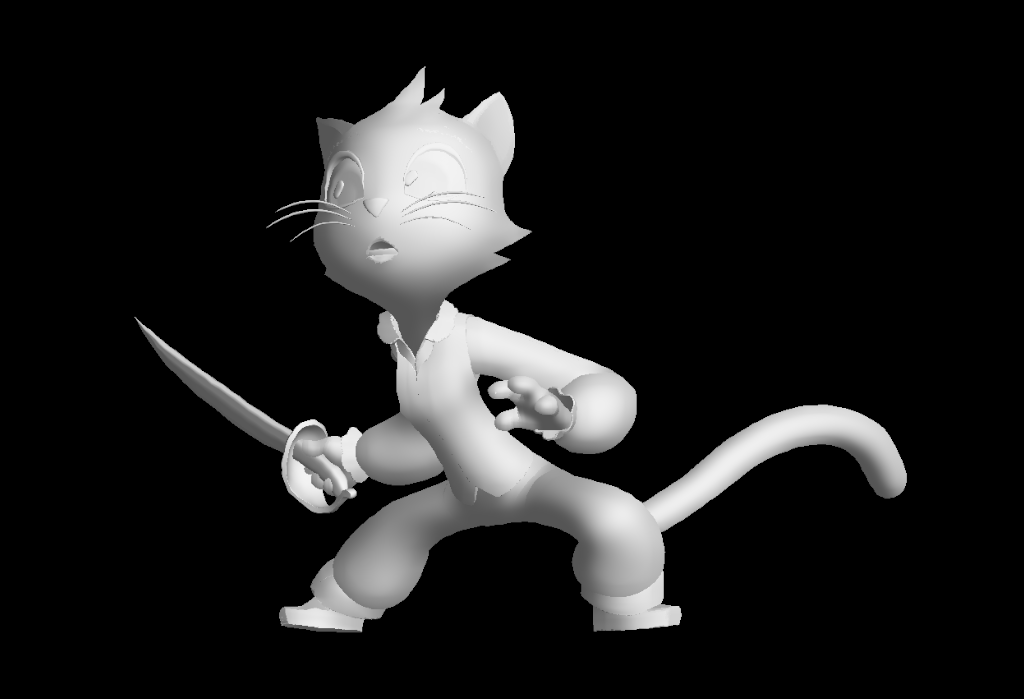

The primary method I’m using for this test is, as far as I know, novel. I worked with Morgan Robinson and Zurab Abelashvili to develop a post-process shader that blurs lighting in screen space to remove small scale details. Combined with some tricks that generate normals for spherical-ish shapes like the head, this gives a much more satisfactory simplification to my eye than blurring the vertex normals did. Here’s a comparison between a conventional two-tone and a two-tone created with this light-blurring system:

Two-tones using this system work a lot better, but I’ve actually come to think that the best use of it is for simplified continuous shading:
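The shader itself isn't shown here, but the general idea of blurring lighting in screen space before thresholding can be sketched in a few lines of Python. Everything in this block is an assumption made for illustration: a 1D scanline stands in for the screen, a box filter stands in for whatever kernel the real shader uses, and the mask that keeps background pixels out of the average is a guess at how one might avoid bleeding at the silhouette.

```python
def blur_lighting(lighting, mask, radius=1):
    """Box-blur lighting along a scanline, averaging only pixels covered by the mask."""
    blurred = []
    for i in range(len(lighting)):
        if not mask[i]:
            blurred.append(0.0)  # background pixels are left unlit
            continue
        lo, hi = max(0, i - radius), min(len(lighting), i + radius + 1)
        samples = [lighting[j] for j in range(lo, hi) if mask[j]]
        blurred.append(sum(samples) / len(samples))
    return blurred

def two_tone(value, threshold=0.5, dark=0.25, lit=1.0):
    """Collapse a continuous lighting value into one of two flat tones."""
    return lit if value >= threshold else dark

# Hypothetical lighting values along one scanline; mask marks character pixels.
lighting = [0.0, 0.0, 0.0, 0.29, 0.63, 0.37, 0.99, 0.96, 0.0]
mask = [0, 1, 1, 1, 1, 1, 1, 1, 0]

blurred = blur_lighting(lighting, mask, radius=1)
sharp_tones = [two_tone(v) for v, m in zip(lighting, mask) if m]
soft_tones = [two_tone(v) for v, m in zip(blurred, mask) if m]
```

With this data, thresholding the raw lighting gives a terminator that flips back and forth, while thresholding the blurred lighting gives a single clean break. The `blurred` values on their own are the toy equivalent of the simplified continuous shading.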

When combined with “fake” rim lights and the right textures, this provides just the kind of graphic look I’m going for. For those “fake” rims, I’m using a system Chris Perry and I developed for our Epic MegaGrant-funded short Little Bird (more on that soon!) that uses a combination of edge detection and lighting to produce very even, graphic rim lights. I’m also using the camera projection system we developed for Little Bird for the background. Each of these systems really deserves its own post, and I’m going to be going through them one by one in future posts.

This is the first time I’ve felt like I had all the tools I needed to make this kind of production work. There’s still important tooling and automation missing from the pipeline that you’d really need to make it scalable for a large project, but the real unknowns have been tackled. Everything is proceeding as I have foreseen.